It feels like a classical paradox: How do you see the invisible? But for modern astronomers, it is a very real challenge: How do you measure dark matter, which by definition emits no light?

The answer: You see how it impacts things that you can see. In the case of dark matter, astronomers watch how light from distant galaxies bends around it.

An international team of astrophysicists and cosmologists has spent the past year teasing out the secrets of this elusive material, using sophisticated computer simulations and observations from one of the most powerful astronomical cameras in the world, the Hyper Suprime-Cam (HSC). The team is led by astronomers from Princeton University and the astronomical communities of Japan and Taiwan, using data from the first three years of the HSC sky survey, a wide-field imaging survey carried out with the 8.2-meter Subaru telescope on the summit of Maunakea in Hawai’i. Subaru is operated by the National Astronomical Observatory of Japan; its name is the Japanese word for the cluster of stars we call the Pleiades.

The team presented their findings at a webinar attended by more than 200 people, and they will share their work at the “Future Science with CMB x LSS” conference in Japan.

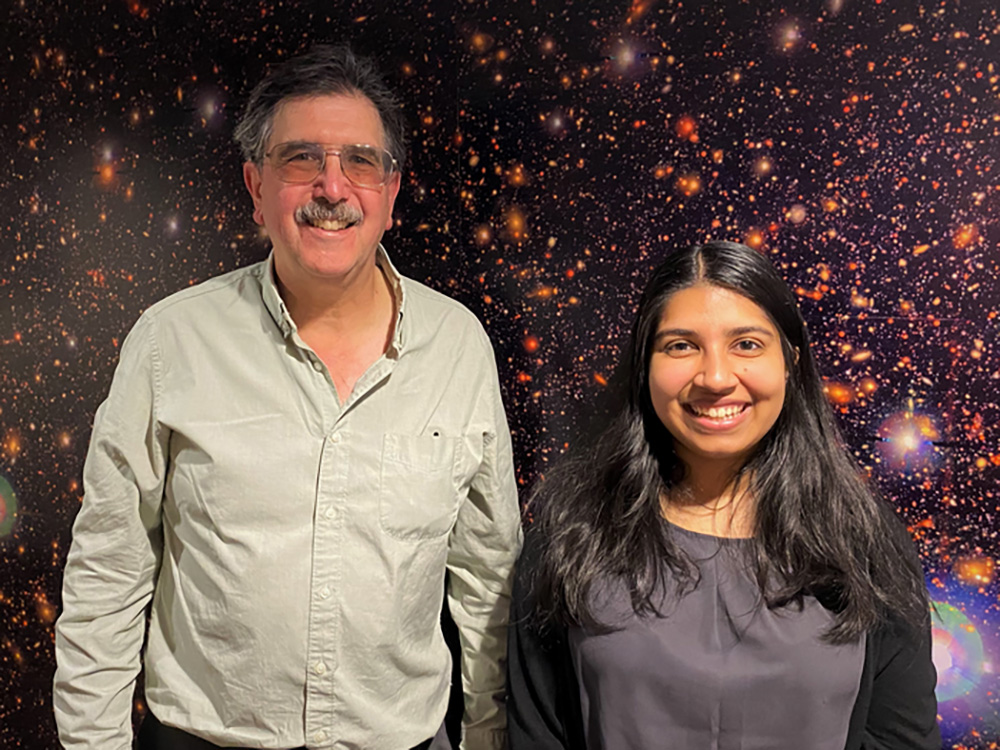

“Our overall goal is to measure some of the most fundamental properties of our universe,” said Roohi Dalal, a graduate student in astrophysics at Princeton. “We know that dark energy and dark matter make up 95% of our universe, but we understand very little about what they actually are and how they’ve evolved over the history of the universe. Clumps of dark matter distort the light of distant galaxies through weak gravitational lensing, a phenomenon predicted by Einstein’s General Theory of Relativity. This distortion is a really, really small effect; the shape of a single galaxy is distorted by an imperceptible amount. But when we make that measurement for 25 million galaxies, we’re able to measure the distortion with quite high precision.”
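The statistical point in that last remark can be sketched in a few lines of Python. This is a toy illustration, not the team's pipeline: it assumes every galaxy is an independent measurement with Gaussian scatter, and the values `SHAPE_NOISE = 0.3` and `TRUE_SHEAR = 0.01` are illustrative assumptions rather than numbers from the survey. Under those assumptions, the uncertainty on the average distortion shrinks as one over the square root of the number of galaxies.

```python
import math
import random


def error_on_mean_shear(shape_noise, n_galaxies):
    """Standard error of the mean distortion from n independent galaxies."""
    return shape_noise / math.sqrt(n_galaxies)


def simulate_mean_shear(true_shear, shape_noise, n_galaxies, seed=0):
    """Average noisy per-galaxy shapes: each is the true shear plus Gaussian scatter."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_galaxies):
        total += rng.gauss(true_shear, shape_noise)
    return total / n_galaxies


SHAPE_NOISE = 0.3   # assumed scatter in a single galaxy's measured shape
TRUE_SHEAR = 0.01   # assumed lensing distortion, far below the per-galaxy scatter

single = error_on_mean_shear(SHAPE_NOISE, 1)            # one galaxy: hopeless
survey = error_on_mean_shear(SHAPE_NOISE, 25_000_000)   # 25 million galaxies
estimate = simulate_mean_shear(TRUE_SHEAR, SHAPE_NOISE, 100_000)

print(f"error from one galaxy:        {single:.3f}")
print(f"error from 25 million:        {survey:.1e}")
print(f"estimate from 100,000 shapes: {estimate:.4f}")
```

With these assumed numbers, the per-galaxy scatter is 30 times larger than the signal, yet the error on the average over 25 million galaxies is thousands of times smaller than the scatter in any one of them. Real galaxies are not perfectly independent, so the actual analysis is considerably more involved.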

To jump to the punchline: The team has measured a value for the “clumpiness” of the universe’s dark matter (known to cosmologists as “S8”) of 0.776, which aligns with values that other gravitational lensing surveys have found in looking at the relatively recent universe — but it does not align with the value of 0.83 derived from the Cosmic Microwave Background, which dates back to the universe’s origins.
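For readers who want the definition behind the shorthand: in the weak-lensing literature, S8 is conventionally built from two other parameters. The symbols below do not appear in this article and are introduced here only for illustration: σ8 is the amplitude of matter fluctuations on scales of 8 h⁻¹ megaparsecs, and Ωm is the fraction of the universe's energy density that is in matter. The expression is the standard convention, not something specific to this team's work.

```latex
S_8 \equiv \sigma_8 \sqrt{\frac{\Omega_m}{0.3}}
```

On this scale, the gap between the two quoted values is 0.83 − 0.776 = 0.054, or about 6.5% of the CMB-derived value.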

The gap between these two values is small, but as more and more studies confirm each of the two values, it doesn’t appear to be accidental. The remaining possibilities are that one of the two measurements contains an as-yet unrecognized error, or that the standard cosmological model is incomplete in some interesting way.

“We’re still being fairly cautious here,” said Michael Strauss, chair of Princeton’s Department of Astrophysical Sciences and one of the leaders of the HSC team. “We’re not saying that we’ve just discovered that modern cosmology is all wrong, because, as Roohi has emphasized, the effect that we’re measuring is a very subtle one. Now, we think we’ve done the measurement right. And the statistics show that there’s only a one in 20 chance that it’s just due to chance, which is compelling but not completely definitive. But as we in the astronomy community come to the same conclusion over multiple experiments, as we keep on doing these measurements, perhaps we’re finding that it’s real.”
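To put "one in 20" into the units physicists usually quote, the probability can be converted into a number of standard deviations ("sigma"). The sketch below assumes a Gaussian distribution and a two-sided test; the article does not say how the team's figure was computed, so this is a rough translation rather than their calculation. The function name `p_value_to_sigma` is made up for this example.

```python
from statistics import NormalDist


def p_value_to_sigma(p, two_sided=True):
    """Convert a chance probability into an equivalent Gaussian sigma level."""
    tail = p / 2 if two_sided else p
    return NormalDist().inv_cdf(1 - tail)


sigma = p_value_to_sigma(1 / 20)
print(f"1-in-20 chance is roughly {sigma:.2f} sigma")
```

Under these assumptions the result is close to 2 sigma, well short of the 5 sigma that physicists conventionally require before claiming a discovery, which is consistent with Strauss's description of the result as "compelling but not completely definitive."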

Hiding and uncovering the data

The idea that some change is needed in the standard cosmological model, that there is some fundamental piece of cosmology yet to be discovered, is a deliciously enticing one for some scientists.

“We are human beings, and we do have preferences. That’s why we do what we call a ‘blinded’ analysis,” Strauss said. “Scientists have become self-aware enough to know that we will bias ourselves, no matter how careful we are, unless we carry out our analysis without allowing ourselves to know the results until the end. For me, I would love to really find something fundamentally new. That would be truly exciting. But because I am prejudiced in that direction, we want to be very careful not to let that influence any analysis that we do.”

To protect their work from their biases, they quite literally hid their results from themselves and their colleagues — month after month after month.

“I worked on this analysis for a year and didn’t get to see the values that were coming out,” said Dalal.

The team even added an extra obfuscating layer: they ran their analyses on three different galaxy catalogs, one real and two with numerical values offset by random values.

“We didn’t know which of them was real, so even if someone did accidentally see the values, we wouldn’t know if the results were based on the real catalog or not,” she said.
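The idea of hiding one real catalog among decoys can be sketched as follows. This is a toy version under stated assumptions, not the HSC team's actual procedure: the function `make_blinded_catalogs`, the single additive offset per decoy, and the `max_offset` size are all hypothetical choices made for illustration.

```python
import random


def make_blinded_catalogs(values, n_decoys=2, max_offset=0.05, seed=None):
    """Return (catalogs, key): shuffled copies of `values`, exactly one untouched.

    `key` is the position of the real catalog. In a blinded analysis it would be
    held by someone outside the analysis team until the unblinding.
    """
    rng = random.Random(seed)
    catalogs = [list(values)]  # index 0 is the real catalog, for now
    for _ in range(n_decoys):
        # Assumed scheme: shift every value in a decoy by one hidden random offset.
        offset = rng.uniform(-max_offset, max_offset)
        catalogs.append([v + offset for v in values])
    order = list(range(len(catalogs)))
    rng.shuffle(order)  # hide which position holds the real catalog
    shuffled = [catalogs[i] for i in order]
    key = order.index(0)
    return shuffled, key


real = [0.01, -0.02, 0.03]
catalogs, key = make_blinded_catalogs(real, seed=1)

# Analysts run the same pipeline on all three catalogs without seeing `key`.
# At the unblinding, the key reveals which set of results to trust.
unblinded = catalogs[key]
```

The design point is that an accidental glimpse of one catalog's result reveals nothing, because nobody on the team knows whether that catalog is the real one.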

On February 16, the international team gathered together on Zoom — in the evening in Princeton, in the morning in Japan and Taiwan — for the “unblinding.”

“It felt like a ceremony, a ritual, that we went through,” Strauss said. “We unveiled the data, and ran our plots, immediately we saw it was great. Everyone went, ‘Oh, whew!’ and everyone was very happy.”

Dalal and her roommate popped a bottle of champagne that night.

A huge survey with the world’s largest telescope camera

HSC is the largest camera on a telescope of its size in the world, a mantle it will hold until the Vera C. Rubin Observatory, currently under construction in the Chilean Andes, begins the Legacy Survey of Space and Time (LSST) in late 2024. In fact, the raw data from HSC is processed with the software designed for LSST. “It is fascinating to see that our software pipelines are able to handle such large quantities of data well ahead of LSST,” said Andrés Plazas, an associate research scholar at Princeton.

The survey that the research team used covers about 420 square degrees of the sky, about the equivalent of 2000 full moons. It’s not a single contiguous chunk of sky, but split among six different pieces, each about the size that you could cover with an outstretched fist. The 25 million galaxies they surveyed are so distant that instead of seeing these galaxies as they are today, the HSC recorded how they were billions of years ago.
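The full-moon comparison is easy to check with back-of-the-envelope arithmetic. The sketch below assumes the moon's apparent diameter is about half a degree, a figure that does not come from this article, and treats the moon as a flat disk on the sky.

```python
import math


def full_moons_in_area(area_sq_deg, moon_diameter_deg=0.5):
    """Number of full-moon disks that fit in a given area of sky."""
    moon_area = math.pi * (moon_diameter_deg / 2) ** 2  # about 0.2 square degrees
    return area_sq_deg / moon_area


n_moons = full_moons_in_area(420)
print(f"420 square degrees is about {n_moons:.0f} full moons")
```

With that assumed diameter the answer comes out slightly above 2,000, in line with the rounded figure quoted above.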

Each of these galaxies glows with the fires of tens of billions of suns, but because they are so far away, they are extremely faint, as much as 25 million times fainter than the faintest stars we can see with the naked eye.
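Astronomers usually express faintness in magnitudes, a logarithmic scale on which a difference of 2.5 × log10(brightness ratio) separates two objects. The sketch below converts the article's factor of 25 million into magnitudes. The naked-eye limit of magnitude 6 is a commonly quoted rule of thumb and an assumption here, not a number from the survey.

```python
import math


def magnitude_difference(flux_ratio):
    """Magnitude gap corresponding to a ratio of brightnesses."""
    return 2.5 * math.log10(flux_ratio)


NAKED_EYE_LIMIT_MAG = 6.0  # assumed limit for dark-sky, unaided viewing

delta_mag = magnitude_difference(25_000_000)
faint_limit = NAKED_EYE_LIMIT_MAG + delta_mag

print(f"25 million times fainter = {delta_mag:.1f} magnitudes")
print(f"implied faintest galaxies: about magnitude {faint_limit:.1f}")
```

Under that assumption, a factor of 25 million corresponds to about 18.5 magnitudes, which places the faintest galaxies in the sample near magnitude 24.5.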

“It is extremely exciting to see these results from HSC collaboration, especially as this data is closest to what we expect from Rubin Observatory, which the community is working towards together,” said cosmologist Alexandra Amon, a Senior Kavli Fellow at Cambridge University and a senior researcher at Trinity College, who was not involved in this research. “Their deep survey makes for beautiful data. For me, it is intriguing that HSC, like the other independent weak lensing surveys, point to a low value for S8 — it’s important validation, and exciting that these tensions and trends force us to pause and think about what that data is telling us about our Universe!”

The standard cosmological model

The standard model of cosmology is “astonishingly simple” in some ways, explained Andrina Nicola of the University of Bonn, who advised Dalal on this project when she was a postdoctoral scholar at Princeton. The model posits that the universe is made up of only four basic constituents: ordinary matter (atoms, mostly hydrogen and helium), dark matter, dark energy and photons.

According to the standard model, the universe has been expanding since the Big Bang 13.8 billion years ago: it started out almost perfectly smooth, but the pull of gravity on the subtle fluctuations in the universe has caused structure (galaxies enveloped in dark matter clumps) to form. In the present-day universe, the relative contributions of ordinary matter, dark matter and dark energy are about 5%, 25% and 70%, respectively, plus a tiny contribution from photons.

The standard model is defined by only a handful of numbers: the expansion rate of the universe; a measure of how clumpy the dark matter is (S8); the relative contributions of the constituents of the universe (the 5%, 25%, 70% numbers above); the overall density of the universe; and a technical quantity describing how the clumpiness of the universe on large scales relates to that on small scales.

“And that’s basically it!” Strauss said. “We, the cosmological community, have converged on this model, which has been in place since the early 2000s.”

Cosmologists are eager to test this model by constraining these numbers in various ways, such as by observing the fluctuations in the Cosmic Microwave Background (which in essence is the universe’s baby picture, capturing how it looked after its first 400,000 years), modeling the expansion history of the universe, measuring the clumpiness of the universe in the relatively recent past, and others.

“We’re confirming a growing sense in the community that there is a real discrepancy between the measurement of clumping in the early universe (measured from the CMB) and that from the era of galaxies, ‘only’ 9 billion years ago,” said Arun Kannawadi, an associate research scholar at Princeton who was involved in the analysis.

Five lines of attack

Dalal’s work does a so-called Fourier-space analysis; a parallel real-space analysis was led by Xiangchong Li of Carnegie Mellon University, who worked in close collaboration with Rachel Mandelbaum, who completed her physics A.B. in 2000 and her Ph.D. in 2006, both from Princeton. A third analysis, a so-called 3×2-point analysis, takes a different approach of measuring the gravitational lensing signal around individual galaxies, to calibrate the amount of dark matter associated with each galaxy. That analysis was led by Sunao Sugiyama of the University of Tokyo, Hironao Miyatake (a former Princeton postdoctoral fellow) of Nagoya University and Surhud More of the Inter-University Centre for Astronomy and Astrophysics in Pune, India.

These five sets of analyses each use the HSC data to come to the same conclusion about S8.

Doing both the real-space analysis and the Fourier-space analysis “was sort of a sanity check,” said Dalal. She and Li worked closely to coordinate their analyses, using blinded data. Any discrepancies between those two would say that the researchers’ methodology was wrong. “It would tell us less about astrophysics and more about how we might have screwed up,” Dalal said.

“We didn’t know until the unblinding that two results were bang-on identical,” she said. “It felt miraculous.”

Sugiyama added: “Our 3×2-point analysis combines the weak lensing analysis with the clustering of galaxies. Only after unblinding did we know that our results were in beautiful agreement with those of Roohi and Xiangchong. The fact that all these analyses are giving the same answer gives us confidence that we’re doing something right!”

Learn more at https://hsc-release.mtk.nao.ac.jp/doc/index.php/. This research will be presented at “Future Science with CMB x LSS,” a conference running from April 10 to 14 at the Yukawa Institute for Theoretical Physics, Kyoto University. This research was supported by the National Science Foundation Graduate Research Fellowship Program (DGE-2039656); the National Astronomical Observatory of Japan; the Kavli Institute for the Physics and Mathematics of the Universe; the University of Tokyo; the High Energy Accelerator Research Organization (KEK); the Academia Sinica Institute for Astronomy and Astrophysics in Taiwan; Princeton University; the FIRST program from the Japanese Cabinet Office; the Ministry of Education, Culture, Sports, Science and Technology (MEXT); the Japan Society for the Promotion of Science; the Japan Science and Technology Agency; the Toray Science Foundation; and the Vera C. Rubin Observatory.